EQing in Octaves: Part 1

This is the first of a three-part email series designed to simplify your approach to EQ and help you make better mixing decisions.

We measure sound frequency in Hertz (Hz), or cycles per second, and usually plot it on a logarithmic scale. While essential for describing audio, this unit can feel abstract and disconnected from how we think about and create music. For example, a frequency of 100 Hz means a sound wave completes 100 full cycles every second.

Musical notes are sound waves oscillating at specific frequencies, and moving up or down an octave simply doubles or halves that frequency.

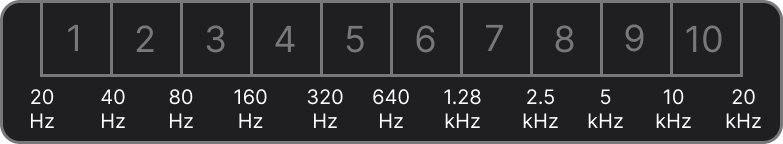

Here’s where it gets tricky: there are only 12 notes in an octave, regardless of whether you’re looking at 30–60 Hz or 3,000–6,000 Hz. This is because octaves follow a logarithmic scale: frequencies double with each octave (e.g., 20 Hz, 40 Hz, 80 Hz, 160 Hz, and so on).
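If you like to see the numbers, here is a minimal Python sketch of that idea. It assumes standard 12-tone equal temperament, where each note is the previous one multiplied by the twelfth root of 2; that tuning, and the helper name `notes_in_octave`, are my assumptions for illustration.

```python
# Assumption: 12-tone equal temperament, where each semitone step
# multiplies the frequency by 2 ** (1 / 12).
SEMITONES_PER_OCTAVE = 12

def notes_in_octave(start_hz):
    """Return the 12 note frequencies from start_hz up to (not including) its octave."""
    return [start_hz * 2 ** (n / SEMITONES_PER_OCTAVE)
            for n in range(SEMITONES_PER_OCTAVE)]

low = notes_in_octave(30.0)      # the 30-60 Hz octave
high = notes_in_octave(3000.0)   # the 3,000-6,000 Hz octave

# Both octaves hold the same number of notes...
print(len(low), len(high))

# ...but the gap in Hz between neighboring notes is 100 times wider up high.
print(round(low[1] - low[0], 2), round(high[1] - high[0], 2))
```

The first semitone step is under 2 Hz in the low octave and close to 180 Hz in the high one, even though both are the same musical distance.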

This doubling can seem daunting, especially on an EQ graph, but understanding it can simplify how you approach EQing and balancing a mix.

Simplifying EQ: From Hertz to Octaves

The human ear picks up frequencies from about 20 Hz to 20,000 Hz, a pretty wide range. But sound doesn’t stop at the limits of our hearing. There are sound waves below what we can hear (infrasonic) and above it (ultrasonic). In digital audio, however, the very lowest frequencies are usually removed by DC filters and the highest by anti-aliasing filters, whose cutoff depends on the sample rate.

Focusing on what we can hear, if we think in terms of musical octaves instead of raw numbers, that big range of 20 Hz to 20,000 Hz boils down to just under 10 octaves. That’s 10 manageable “bands” to work with, instead of 20,000 individual frequencies. Much easier to wrap your head around, right? Those 10 octaves can be broken down like this...
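For anyone who wants to check the math, here is a rough Python sketch. It assumes the bands start at 20 Hz and double from there, with the top band clipped at 20 kHz; the band numbering and the function name `octave_bands` are illustrative choices, not a standard.

```python
import math

LOW_HZ = 20.0
HIGH_HZ = 20_000.0

# Number of octaves = how many times you double LOW_HZ to reach HIGH_HZ.
octaves = math.log2(HIGH_HZ / LOW_HZ)
print(f"Octaves in the audible range: {octaves:.2f}")

def octave_bands(low_hz, high_hz):
    """Split a range into octave-wide bands, doubling the lower edge each time.

    The last band is clipped at high_hz, so it may be slightly narrower
    than a full octave.
    """
    bands = []
    edge = low_hz
    while edge < high_hz:
        bands.append((edge, min(edge * 2, high_hz)))
        edge *= 2
    return bands

bands = octave_bands(LOW_HZ, HIGH_HZ)
for number, (lower, upper) in enumerate(bands, start=1):
    print(f"Octave {number}: {lower:g}-{upper:g} Hz")
```

The count comes out at roughly 9.97 octaves, and the top band (10,240 Hz to 20,000 Hz) is the one that falls just short of a full octave.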

The 'middle' frequency of our hearing range, measured in octaves rather than in raw Hertz, is around 640 Hz. That is an unexpected midpoint (the arithmetic halfway point is about 10 kHz), but it makes sense when we think in terms of octaves. There are 5 octaves below 640 Hz (down to 20 Hz) and about 5 above (up to 20 kHz), meaning our hearing is split evenly in terms of octaves.
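Here is a small Python sketch of that midpoint, assuming the usual 20 Hz to 20 kHz limits. It compares the halfway point on a linear scale with the halfway point on an octave (logarithmic) scale, which is the geometric mean of the two limits.

```python
import math

LOW_HZ, HIGH_HZ = 20.0, 20_000.0

# Halfway point if you count in raw Hz (linear scale).
arithmetic_mid = (LOW_HZ + HIGH_HZ) / 2

# Halfway point if you count in octaves (log scale): the geometric mean.
geometric_mid = math.sqrt(LOW_HZ * HIGH_HZ)

# 20 Hz doubled five times lands on the round figure used above.
five_octaves_up = LOW_HZ * 2 ** 5

octaves_below = math.log2(five_octaves_up / LOW_HZ)
octaves_above = math.log2(HIGH_HZ / five_octaves_up)

print(f"Linear midpoint:  {arithmetic_mid:.0f} Hz")
print(f"Octave midpoint:  {geometric_mid:.1f} Hz")
print(f"Five octaves up:  {five_octaves_up:.0f} Hz")
print(f"Octaves below/above 640 Hz: {octaves_below:.2f} / {octaves_above:.2f}")
```

The exact octave midpoint is a touch under 640 Hz, which is why the split is "about" 5 and 5 rather than exactly even.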

Relating EQ to musical octaves makes frequency adjustments feel more intuitive and grounded in how we naturally think about music. When EQing, I typically cut with narrower bands, often about an octave wide, to address specific issues. On the other hand, when boosting, I prefer using wider bells, spanning two or more octaves, to shape the tone more musically.
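To put numbers on those widths, here is a hedged Python sketch that turns a bandwidth in octaves into band edges and an approximate Q value. It assumes Q means center frequency divided by the distance between the band edges, a common textbook definition; EQ plugins define Q and bandwidth in different ways, so treat the results as a starting point. The center frequencies (300 Hz and 3 kHz) are made-up examples.

```python
import math

def band_edges(center_hz, width_octaves):
    """Lower and upper edges of a band centered (in octaves) on center_hz."""
    half = width_octaves / 2
    return center_hz / 2 ** half, center_hz * 2 ** half

def octaves_to_q(width_octaves):
    """Approximate Q for a bandwidth in octaves.

    Assumes Q = center / (upper edge - lower edge); real EQs may differ.
    """
    ratio = 2 ** width_octaves
    return math.sqrt(ratio) / (ratio - 1)

# Hypothetical one-octave cut around 300 Hz.
cut_low, cut_high = band_edges(300.0, 1)
print(f"Cut:   {cut_low:.0f}-{cut_high:.0f} Hz, Q ~ {octaves_to_q(1):.2f}")

# Hypothetical two-octave boost around 3 kHz.
boost_low, boost_high = band_edges(3000.0, 2)
print(f"Boost: {boost_low:.0f}-{boost_high:.0f} Hz, Q ~ {octaves_to_q(2):.2f}")
```

Under that definition, a one-octave bell works out to a Q of about 1.4 and a two-octave bell to about 0.67, so a wider boost means a lower Q setting.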

However, not all octaves are perceived equally. Our ears are most sensitive to the 1–5 kHz range (roughly octaves 6–8 on the frequency spectrum), where our hearing is naturally tuned to detect detail—an evolutionary trait that likely developed for survival. This range encompasses the majority of human speech, and our sensitivity to subtle nuances within it has been essential for communication and fostering social bonds throughout history.

That may be a bit too much evolutionary context for this conversation about EQing your mix, but this range of roughly 2.3 octaves demands our attention. Our ears are particularly attuned to this area and are highly sensitive to imbalance, excessive transients, and shifts in loudness. Getting it right can dramatically affect how the listener perceives loudness, balance, and presence in your mix.

Thinking in octaves can transform your approach to EQ, shifting it from a technical grind to a more natural, gestural, and musical process. By mastering the fundamentals of EQ, compression, and saturation, you’ll discover that many “problem-solving” tools—like resonance suppressors (e.g., Soothe 2)—are often used to address balance issues that could be resolved with better EQ techniques. While these tools have their place in specific scenarios, they are frequently overused in modern music production.

By understanding how octaves relate to the music we create, you can map your EQ decisions more effectively to the issues you hear in a mix. This empowers you to make more informed choices and reduces reliance on complex modern resonance tools, which can inadvertently introduce unwanted artifacts that often go unnoticed.

In Part 2, we’ll dive deeper into the individual frequency bands. We’ll talk about common issues, how to tackle them, and how to keep your mixes musical and balanced.

Have questions? Just hit reply, and we’ll get back to you. You can also tag us on Instagram. We’re always happy to connect!

Be well,

Ryan Schwabe

Grammy-nominated and multi-platinum mixing & mastering engineer

Founder of Schwabe Digital